Should Sklearn add new gpu-version for tuning parameters faster in the future? · Discussion #19185 · scikit-learn/scikit-learn · GitHub

Vinay Prabhu on Twitter: "If you are using sklearn modules such as KDTree & have a GPU at your disposal, please take a look at sklearn compatible CuML @rapidsai modules. For a

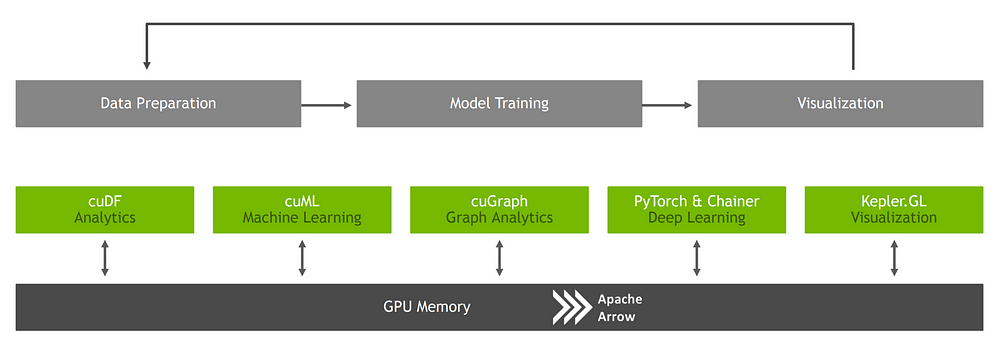

Boosting Machine Learning Workflows with GPU-Accelerated Libraries | by João Felipe Guedes | Towards Data Science

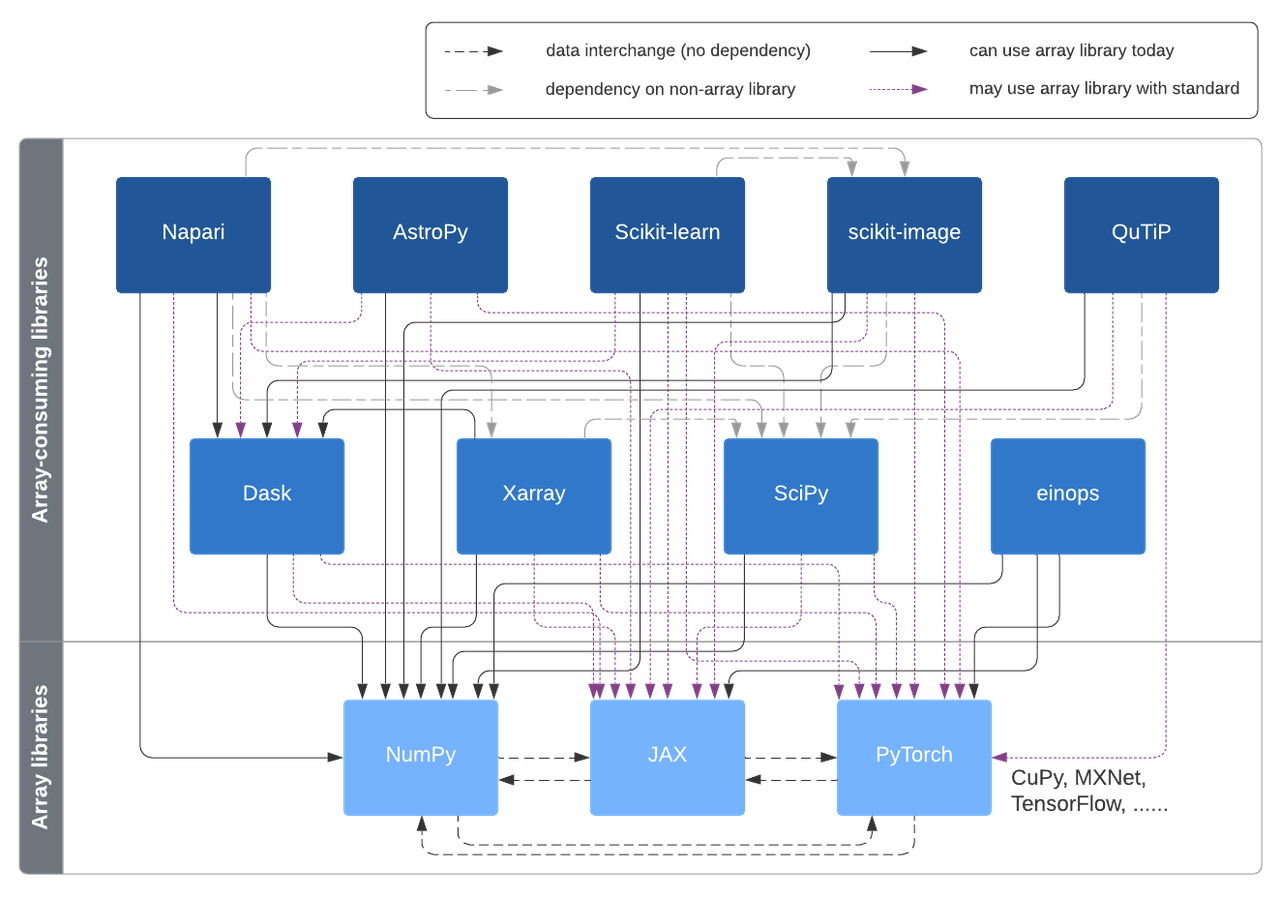

A vision for extensibility to GPU & distributed support for SciPy, scikit-learn, scikit-image and beyond | Quansight Labs

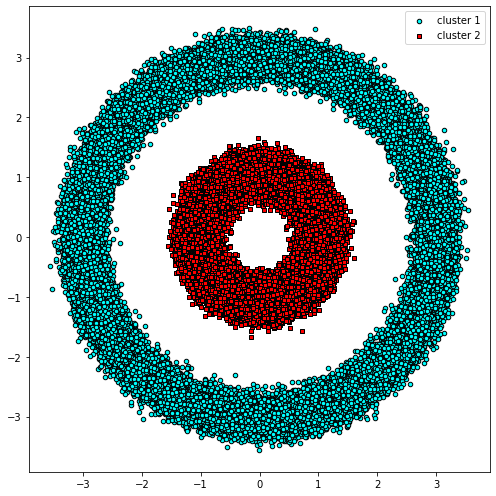

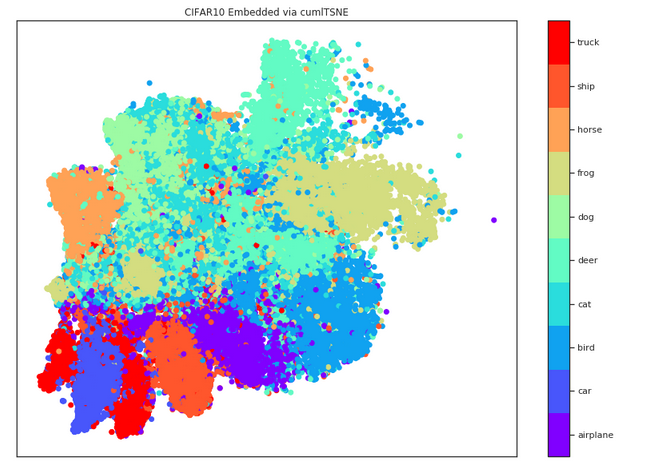

600X t-SNE speedup with RAPIDS. RAPIDS GPU-accelerated t-SNE achieves a… | by Connor Shorten | Towards Data Science

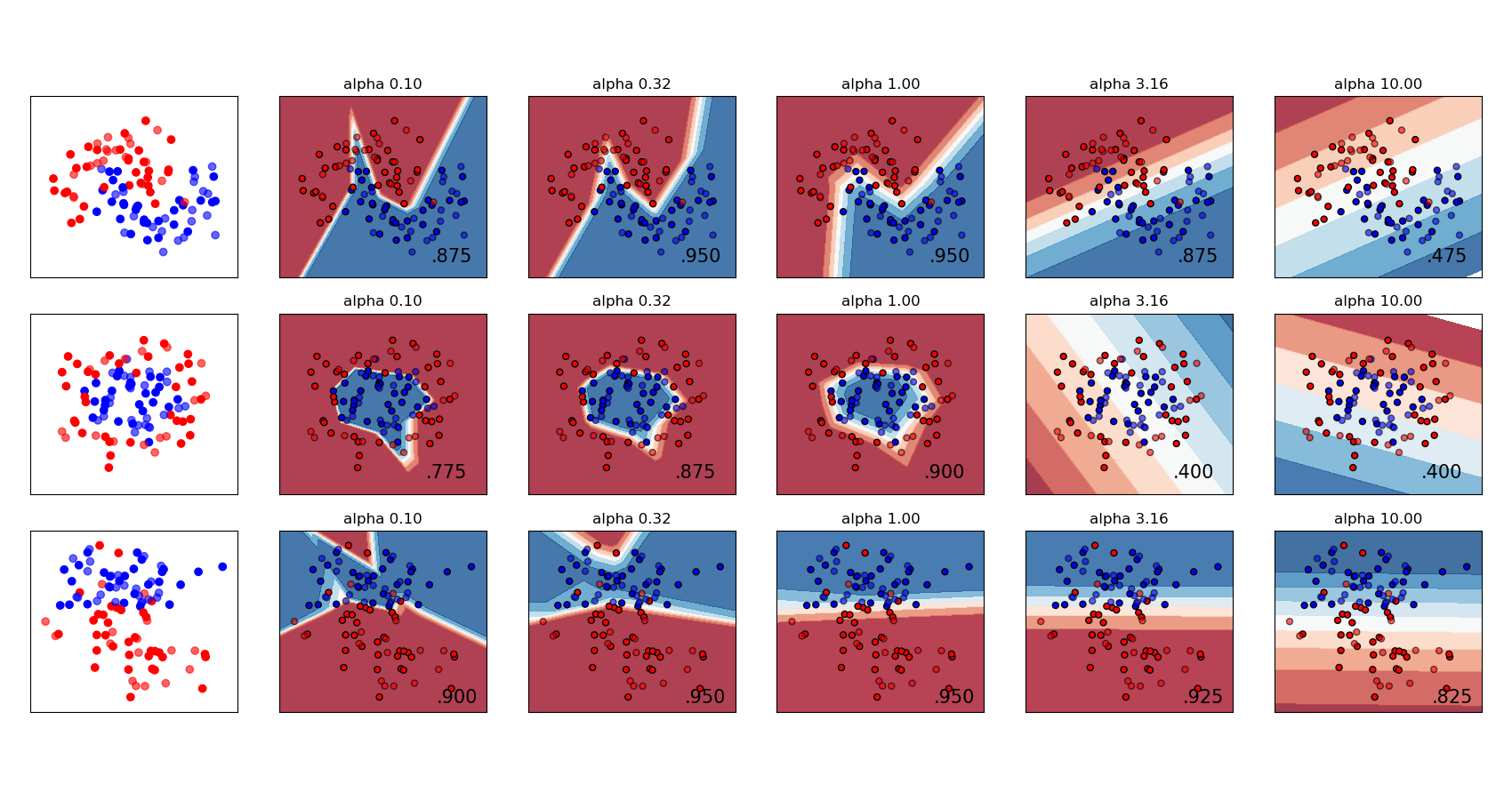

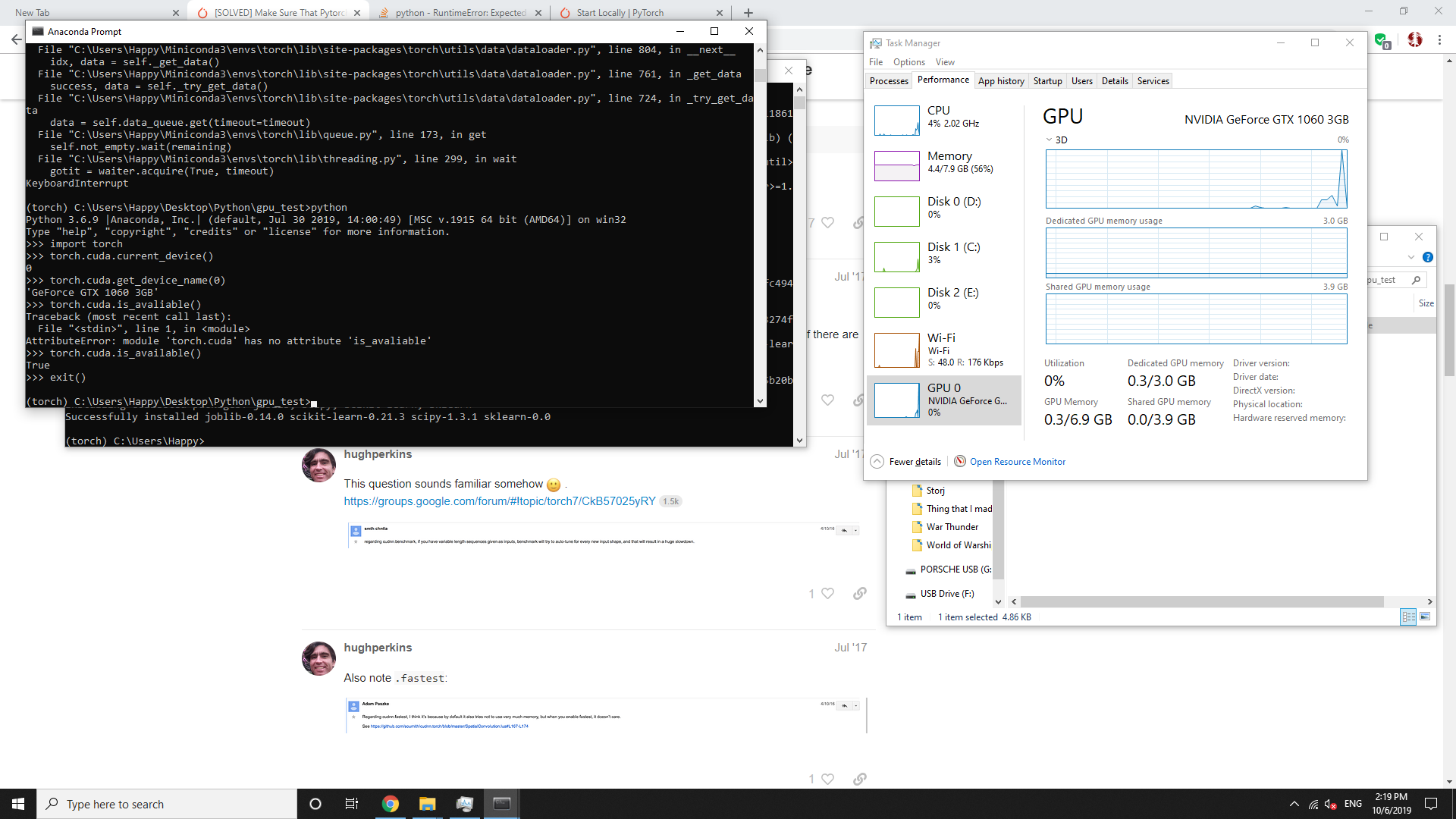

python - Why RandomForestClassifier on CPU (using SKLearn) and on GPU (using RAPIDs) get differents scores, very different? - Stack Overflow

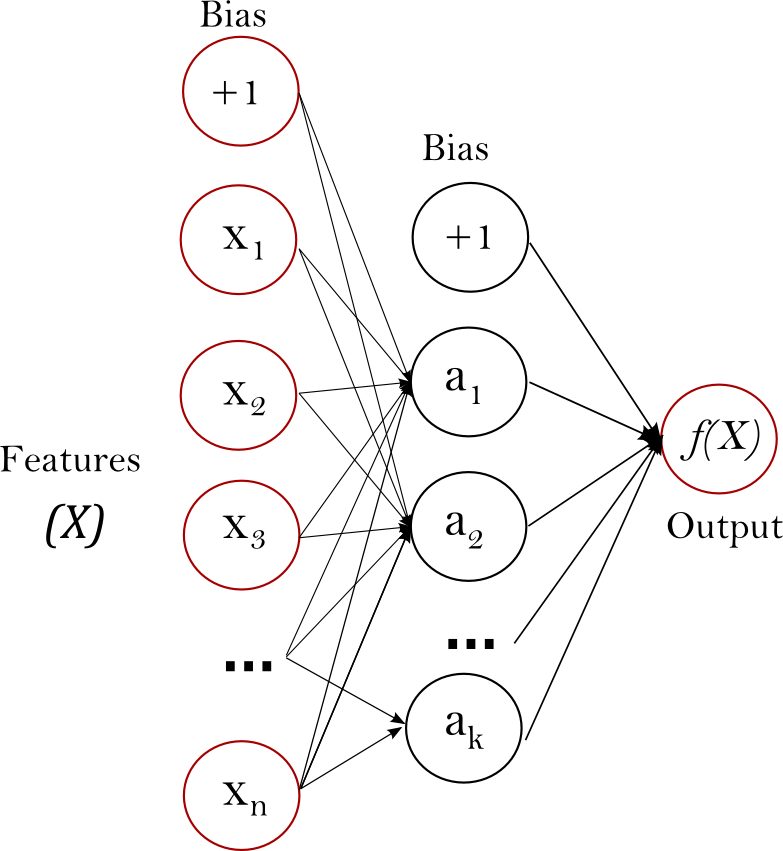

[P] Sklearn + Statsmodels written in PyTorch, Numba - HyperLearn (50% Faster, Learner with GPU support) : r/MachineLearning